This blog post will guide you to build a Conversational Social Robot that uses speech, voice, facial expressions and gestures for communication.

In recent years, conversational AI has taken the world by storm, driving researchers and developers to explore innovative ways to integrate language understanding into our lives. Large transformer-based language models have been a breakthrough in natural language processing and have paved the way for new advancements. Over 100 such models are available for experiments, including Open Assistant, ChatGPT, Koala, Vicuna, FastChat-T5, MPT-Chat, and many others. Some are available via a so-called playground such as https://chat.lmsys.org, while others provide services for specialized content production built on an underlying large language model, such as https://copy.ai and https://www.perplexity.ai/. Additionally, OpenAI models offer API access.

However, a powerful language model alone isn’t sufficient for a social robotics application. Effective communication with social robots involves speech, voice, emotions, and body language. QTrobot from LuxAI facilitates the seamless integration of the latest language technologies, and is now equipped with the power of ChatGPT for language understanding and generation.

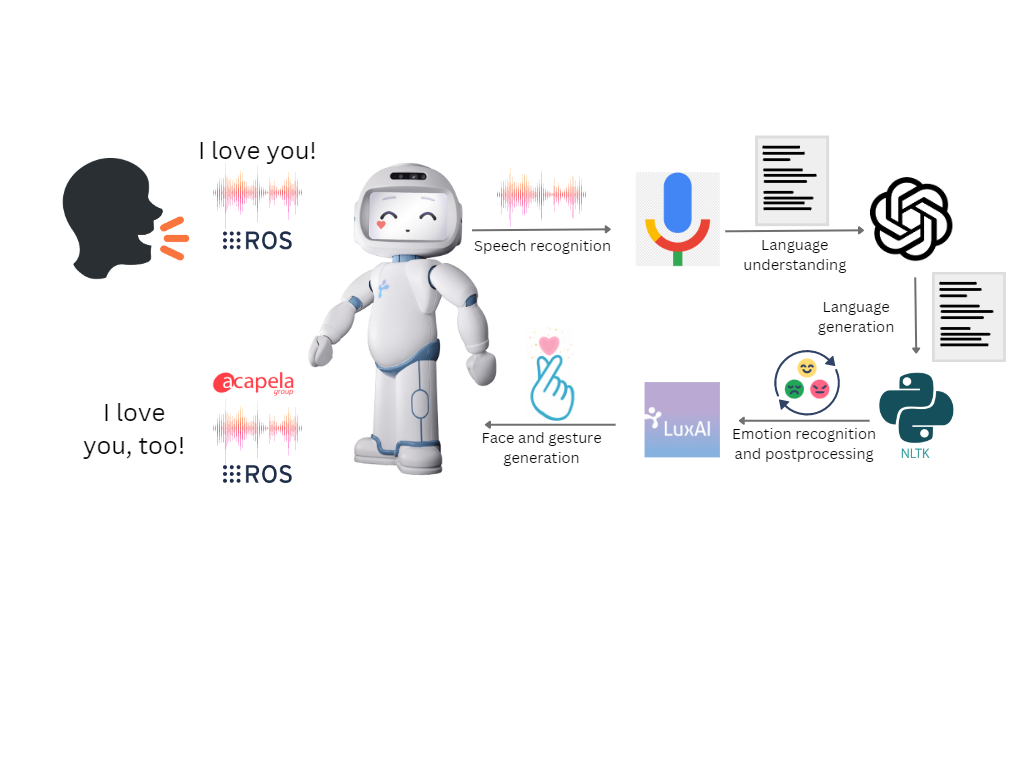

The figure below shows the basic architecture for building a conversational social robot with QTrobot and ChatGPT. The code is available on the LuxAI GitHub.

Now, let’s delve into each part of the implementation in detail!

Online speech recognition

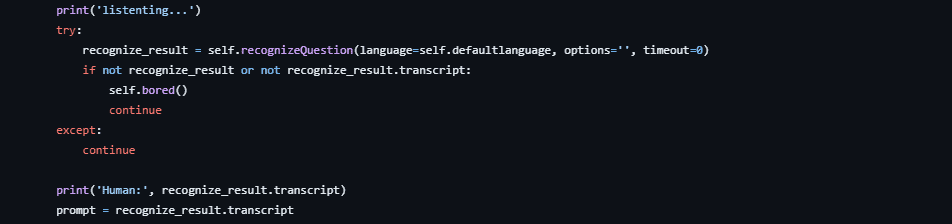

Large language models work with text inputs, also called prompts. Our AI chatbot uses online speech recognition. Its advantage is that the audio can be analyzed as a stream, so the user can speak for as long as they need and the transcription is quite fast. The disadvantage of using Google Speech is that the language of communication needs to be known in advance. The output of the speech recognizer is a transcription of the speech, which we can use as our prompt.

For that, we created a Google speech recognition wrapper that comes pre-installed on QTrobot. For this wrapper to work, you will need to set up a Google Cloud account, get the Google API credentials, and run the Google speech app. Detailed instructions for setting up the account and getting the API credentials can be found in this link.

All we need to do is define and call the Google Speech ROS service as below:
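A minimal sketch of such a call, under stated assumptions: the wrapper is taken to expose a ROS service that accepts a language code, an options string and a timeout, and returns a response with a `transcript` field. The service name `/qt_robot/speech/recognize`, the `speech_recognize` type and the field names are assumptions that should be checked against the LuxAI repository. The service is passed in as a plain callable so the helper can also be tried without a robot.

```python
from types import SimpleNamespace


def recognize_speech(service, language="en-US", timeout=10):
    """Call a speech recognition service and return the cleaned transcript.

    `service` is any callable that accepts `language`, `options` and
    `timeout` keyword arguments and returns an object with a `transcript`
    attribute (assumed interface). On the robot this could be a ROS
    service proxy, for example
        rospy.ServiceProxy('/qt_robot/speech/recognize', speech_recognize)
    where the service name and type are assumptions, not confirmed here.
    """
    response = service(language=language, options="", timeout=timeout)
    transcript = (getattr(response, "transcript", "") or "").strip()
    return transcript or None  # None means nothing was recognized


def fake_service(language, options, timeout):
    """Stand-in for the real service so the sketch runs without a robot."""
    return SimpleNamespace(transcript="  hello QTrobot  ")


if __name__ == "__main__":
    print(recognize_speech(fake_service, language="en-US"))
```

Returning `None` for an empty transcript lets the calling code decide whether to listen again or to ask the user to repeat.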

The parameter language can be set to any other language that Google Speech covers. Any other speech recognition service for any language can be used instead of Google Speech, just try it out!

Language understanding and generation with OpenAI GPT models

OpenAI’s GPT (Generative Pre-trained Transformer) is a language model that uses machine learning to generate natural language text. GPT can complete sentences, paragraphs, and entire articles, based on the large amount of data it has been trained on. GPT has been used in various applications, including chatbots, content generation, and text summarization.

We will use GPT to generate responses from speech recognition transcripts.

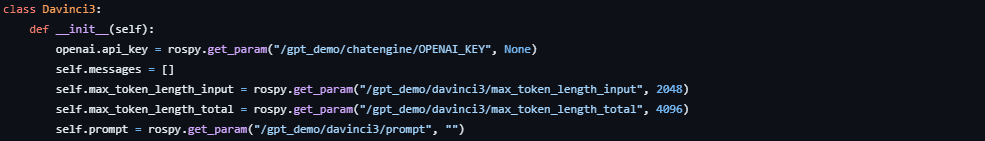

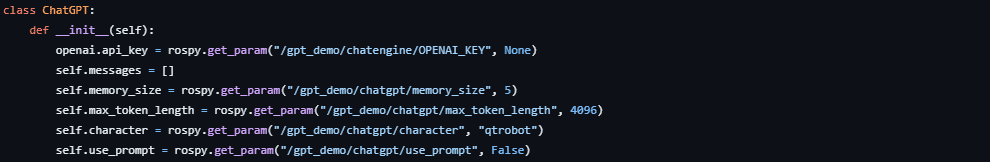

To make this task simple, we have created two Python classes for OpenAI models: one utilizing the “text-davinci” model and another for the “gpt-3.5-turbo” model. We have implemented history tracking and included a system message for GPT to give QTrobot a personality, as shown below. You can check the full code at this link and read more about OpenAI here.

Davinci3 Class:

GPT3.5 Class:

The system_message stores one part of the prompt that helps to create the identity of the robot. The recognised transcript of the speech is attached to it and both together form a prompt that is sent to the language understanding and generation model, GPT in this example.
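To illustrate how a system message, the conversation history and a new transcript can be combined, here is a minimal sketch. It is not the class from the repository: `ChatPromptBuilder`, `max_turns` and the example system message are hypothetical names chosen for illustration. It produces the list of role/content messages that chat models such as gpt-3.5-turbo accept.

```python
class ChatPromptBuilder:
    """Keeps a system message and a bounded history of conversation turns."""

    def __init__(self, system_message, max_turns=5):
        self.system_message = system_message
        self.max_turns = max_turns  # number of user/assistant pairs to keep
        self.history = []           # list of (user_text, assistant_text)

    def build_messages(self, transcript):
        """Return messages: system message, past turns, then the new input."""
        messages = [{"role": "system", "content": self.system_message}]
        for user_text, assistant_text in self.history:
            messages.append({"role": "user", "content": user_text})
            messages.append({"role": "assistant", "content": assistant_text})
        messages.append({"role": "user", "content": transcript})
        return messages

    def remember(self, transcript, response):
        """Store a finished turn and drop the oldest turns beyond max_turns."""
        self.history.append((transcript, response))
        overflow = len(self.history) - self.max_turns
        if overflow > 0:
            del self.history[:overflow]


if __name__ == "__main__":
    builder = ChatPromptBuilder("You are QTrobot, a friendly social robot.")
    builder.remember("Hello!", "Hi, nice to meet you!")
    for message in builder.build_messages("What is your name?"):
        print(message)
```

Bounding the history keeps the prompt within the model's context limit at the cost of forgetting older turns.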

We can now call OpenAI to get a generated response from the GPT model.

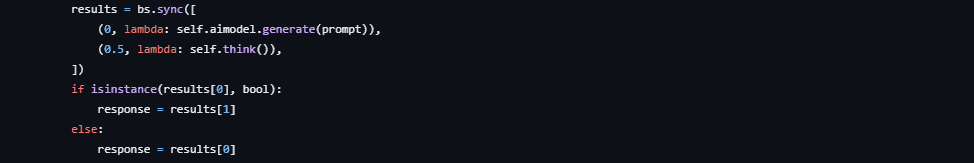

To make this simple example work nicely, we have created the TaskSynchronizer, a Python class that helps to synchronise speech processing and language generation. You can learn more about it here.

Postprocessing, emotion recognition and embodied response

Emotions and sentiments are analysed at two points:

- The user’s input is analyzed so that the chatbot can express empathy based on the emotions and feelings detected;

- The response from the language model is analyzed in order to embody the emotional expressions detected in the generated language.

We use NLTK and text2emotion for the analysis of emotions and sentiment. The response from the language model is also analysed in terms of sentence structure and keywords. In each sentence, we look for keywords and add matching gestures to the robot output. For example, if there is a ‘yes’ in one of the sentences, we would like QTrobot to nod its head; if we detect a ‘no’, the robot shakes its head to show an embodied negative response. While we have implemented this feature for a few selected keywords, you have the flexibility to extend it to any word you like and to make the model for embodying the generated textual response more complex.
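The keyword-to-gesture idea can be sketched in a few lines. This is an illustration only: the `KEYWORD_GESTURES` table and the gesture names `nod` and `shake_head` are hypothetical placeholders rather than QTrobot gesture identifiers, and a simple regular expression stands in for a proper sentence tokenizer.

```python
import re

# Hypothetical keyword-to-gesture table; the gesture names are placeholders.
KEYWORD_GESTURES = {
    "yes": "nod",
    "no": "shake_head",
}


def split_sentences(text):
    """Split text into sentences on '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]


def gestures_for_response(text):
    """Return (sentence, gesture) pairs; gesture is None if no keyword matches."""
    plan = []
    for sentence in split_sentences(text):
        # Compare whole words only, so that 'know' does not match 'no'.
        words = re.findall(r"[a-z']+", sentence.lower())
        gesture = next(
            (KEYWORD_GESTURES[word] for word in words if word in KEYWORD_GESTURES),
            None,
        )
        plan.append((sentence, gesture))
    return plan


if __name__ == "__main__":
    reply = "Yes, I like music. No, I have never been to space. What about you?"
    for sentence, gesture in gestures_for_response(reply):
        print(gesture, "|", sentence)
```

Plain keyword matching is easy to extend but also easy to fool: a phrase such as "no problem" would still trigger the head shake, which is one reason to consider a richer model.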

With the recognised emotions and sentiments, we can use QTrobot’s facial expressions, gestures and text-to-speech to generate a multimodal response to the user’s prompt.
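One simple way to turn recognised emotions into a facial expression is to pick the strongest emotion above a threshold. The sketch below assumes the analysis step yields a dictionary of emotion labels with scores between 0 and 1. The labels, the expression names and the threshold are illustrative assumptions, not values taken from the repository.

```python
# Hypothetical mapping from emotion labels to robot expression names.
EMOTION_TO_EXPRESSION = {
    "Happy": "happy",
    "Sad": "sad",
    "Angry": "angry",
    "Surprise": "surprised",
    "Fear": "afraid",
}


def choose_expression(emotion_scores, threshold=0.4, default="neutral"):
    """Pick the expression for the strongest emotion, or fall back to default.

    `emotion_scores` maps emotion labels to scores between 0 and 1.
    """
    if not emotion_scores:
        return default
    label, score = max(emotion_scores.items(), key=lambda item: item[1])
    if score < threshold:
        return default  # no emotion is strong enough to display
    return EMOTION_TO_EXPRESSION.get(label, default)


if __name__ == "__main__":
    scores = {"Happy": 0.7, "Sad": 0.1, "Angry": 0.0, "Surprise": 0.2, "Fear": 0.0}
    print(choose_expression(scores))
```

The threshold keeps the robot from switching expressions on weak or ambiguous signals.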

This is a very simple approach, and you can easily try out your own with more complex models of emotions and postprocessing!

Multiturn conversations with QTrobot

After QTrobot shows and says the entire response, we call the Google Speech ROS service again and repeat the entire process. This enables you to have endless conversations with QTrobot, using OpenAI’s GPT model.
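The repeated cycle can be sketched as a small loop. The three steps are passed in as callables, so the sketch makes no claims about the actual QTrobot or OpenAI interfaces. `run_conversation`, `listen`, `generate`, `respond` and `max_turns` are hypothetical names used only for illustration.

```python
def run_conversation(listen, generate, respond, max_turns=None):
    """Repeat listen -> generate -> respond.

    listen()        returns the recognized transcript, or None/'' if nothing
                    was recognized
    generate(text)  returns the reply of the language model for that text
    respond(reply)  makes the robot say and show the reply
    max_turns       stops after that many listening attempts; None loops forever
    """
    turns = 0
    while max_turns is None or turns < max_turns:
        transcript = listen()
        turns += 1
        if not transcript:
            continue  # nothing recognized, listen again
        respond(generate(transcript))
    return turns


if __name__ == "__main__":
    # Fake components so the loop can be tried without a robot.
    inputs = iter(["Hello", None, "How are you?"])
    run_conversation(
        listen=lambda: next(inputs),
        generate=lambda text: f"You said: {text}",
        respond=print,
        max_turns=3,
    )
```

Keeping the three steps as separate callables makes it easy to swap the speech recognizer or the language model without touching the loop.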

Giving the robot an identity

Speaking from different social roles in different social situations requires speakers to behave and to speak differently. We can achieve these differences in the robot’s behavior by writing prompts that make a language model generate language that is closer to the desired identity. For example, the robot can be a tourist guide or a teacher’s assistant, it can be an astronaut who just returned from space or an artificial companion that helps learners of a foreign language to practice conversation. Writing proper prompts is an art! You can find some advice on how to write good prompts here: https://learnprompting.org/ and here: https://www.promptingguide.ai/.

In our GitHub repository, you can find five pre-configured characters for the ‘gpt-3.5-turbo’ model: qtrobot, fisherman, astronaut, therapist and gollum. If you want to try them out, you just need to change the character parameter on line 12 in the ‘gpt_bot.yaml’ file and have fun talking to them.

To use a custom character prompt, you can change the parameter ‘use_custom’ to true and write your own ‘prompt’ parameter instead of the template that you find in the ‘gpt_bot.yaml’. QTrobot will then take your prompt to trigger responses from the GPT model.
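The selection logic described above might look roughly like this. The sketch assumes the YAML file has already been loaded into a dictionary with the keys `character`, `use_custom` and `prompt`. The `CHARACTER_PROMPTS` table and its texts are hypothetical placeholders, not the prompts shipped in the repository.

```python
# Hypothetical character templates; the texts are placeholders.
CHARACTER_PROMPTS = {
    "qtrobot": "You are QTrobot, a friendly humanoid social robot.",
    "astronaut": "You are an astronaut who has just returned from space.",
}


def select_system_prompt(config, templates=CHARACTER_PROMPTS):
    """Return the custom prompt if enabled, else the chosen character template."""
    if config.get("use_custom"):
        prompt = (config.get("prompt") or "").strip()
        if not prompt:
            raise ValueError("use_custom is true but 'prompt' is empty")
        return prompt
    character = config.get("character", "qtrobot")
    if character not in templates:
        raise KeyError(f"unknown character: {character}")
    return templates[character]


if __name__ == "__main__":
    print(select_system_prompt({"character": "astronaut"}))
    print(select_system_prompt({"use_custom": True, "prompt": "You are a pirate."}))
```

Failing loudly on an empty custom prompt or an unknown character makes configuration mistakes visible before the robot starts talking.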

Conclusion and further directions

Now that you have seen how to build a conversational social robot assistant using Google Speech Recognition and OpenAI’s GPT model, you can access the full code in our GitHub repository. Feel free to use it as a foundation and add as many features as you would like to customize your chatbot!

A few ideas for extending the app:

- Try out other models and different prompts! Different LLMs have different strengths and weaknesses, and it takes some trial and error to figure out which one works best for your use case and your prompts.

- Try out other speech recognition services and other languages. Our example works with English, but there are about 7,000 languages in the world!

- Try to modify the responses of the generative model to make them sound more like spoken language. For example, if you prompt the model with something like “You are an astronaut. What do you feel when you come back to earth?” you will receive a long text such as:

“Physically, astronauts often feel a sense of heaviness due to Earth’s gravity after experiencing weightlessness for an extended period. This can result in muscle soreness, joint stiffness, and a general feeling of fatigue. The body needs time to readjust to the effects of gravity and regain its normal strength and coordination. Emotionally, astronauts may experience a mix of emotions upon returning to Earth. They may feel a sense of relief and accomplishment for successfully completing their mission and reuniting with loved ones. At the same time, there can be a sense of nostalgia and longing for the unique experiences they had in space. Some astronauts describe a feeling of awe and a new appreciation for Earth’s beauty and fragility after seeing it from the perspective of space.”

How about adding some ‘uhms’, pauses and backchannelling behaviours to make it sound more like natural speech? For example:

“Physically, astronauts often feel kind of a sense of heaviness coz the Earth’s gravity’s back again after a long period of weightlessness. Uhm… Sometimes we feel a sort of … muscle soreness, joint stiffness, and feel generally tired. The body needs time to readjust to the effects of gravity and regain its normal strength and coordination. You know? Emotionally, it is like a mix of emotions …”

- Try to play with speech modifiers like pace and pitch to express different emotions.

- Try to adjust gestures to specific cultures and social groups.

- Try to combine language generation with a knowledge base to give the model your domain knowledge.

About LuxAI and QTrobot

LuxAI is the developer and manufacturer of QTrobot and distributes QTrobots to countries around the world. The QTrobot platform for research and development combines the best-in-the-market hardware components with a friendly design. QTrobot is a robust platform suitable for intensive working hours and multi-disciplinary research projects on social robotics and human-robot interaction. That makes QTrobot the ideal companion for researchers and developers in the field of social robotics.

QTrobot is a humanoid social robot with extensive capabilities for research and development. QTrobot is a helpful tool in delivering best practices in child education, especially for children with autism and special educational needs. Being a robust platform with extensive built-in features, QTrobot can be used in many ways to support education and research projects.