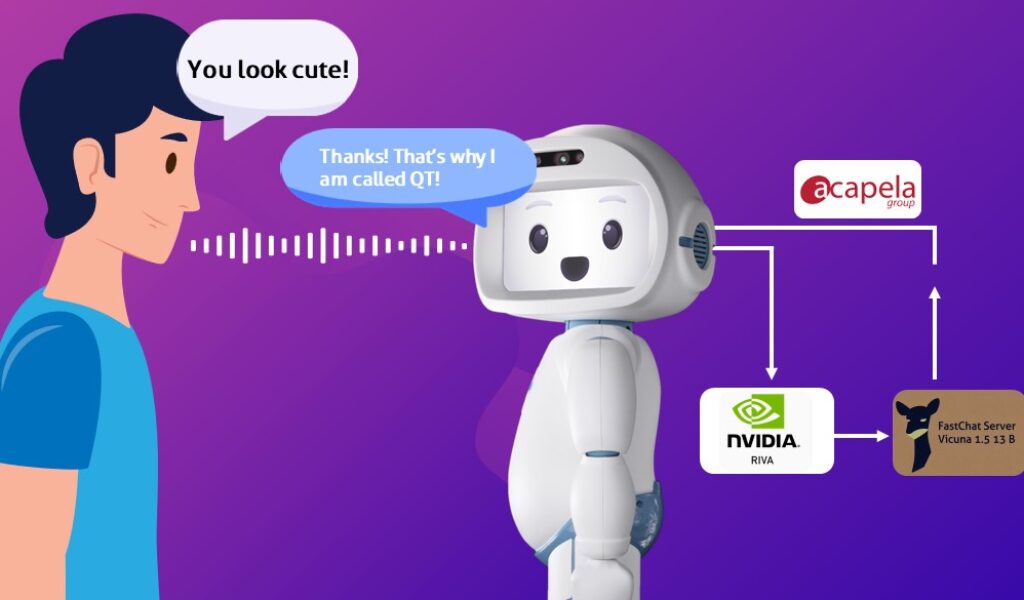

This blog post will guide you through developing a completely offline conversational social robot on the QTrobot AI@Edge's NVIDIA computing board. The robot runs NVIDIA Riva speech recognition and the Vicuna-13B large language model (LMSYS, UC Berkeley), which was trained by fine-tuning LLaMA 2 (Meta)!

In this blog, we’ll talk about:

- The disadvantages of relying on cloud-based services such as OpenAI and Google Speech for conversation in human-robot interaction and social robotics

- How you can run conversational software directly on QTrobot AI@Edge, locally and offline, and how to give the robot a character and personality in the way it converses

Disadvantages of building conversational robots using cloud-based services such as OpenAI and Google Speech

Amazing social robot interactions with QTrobot can now be created and deployed using automatic speech recognition (ASR) and natural language understanding and generation over APIs such as Google Speech and OpenAI's language models (check out how to do it here if you have not done so yet). However, in some scenarios a stable Internet connection is not available, and in other use cases relying on one is undesirable because of privacy or trust concerns. In addition, delays in API requests caused by network or model latency can reduce the fluency of the interaction. The newest QTrobot by LuxAI provides a solution!

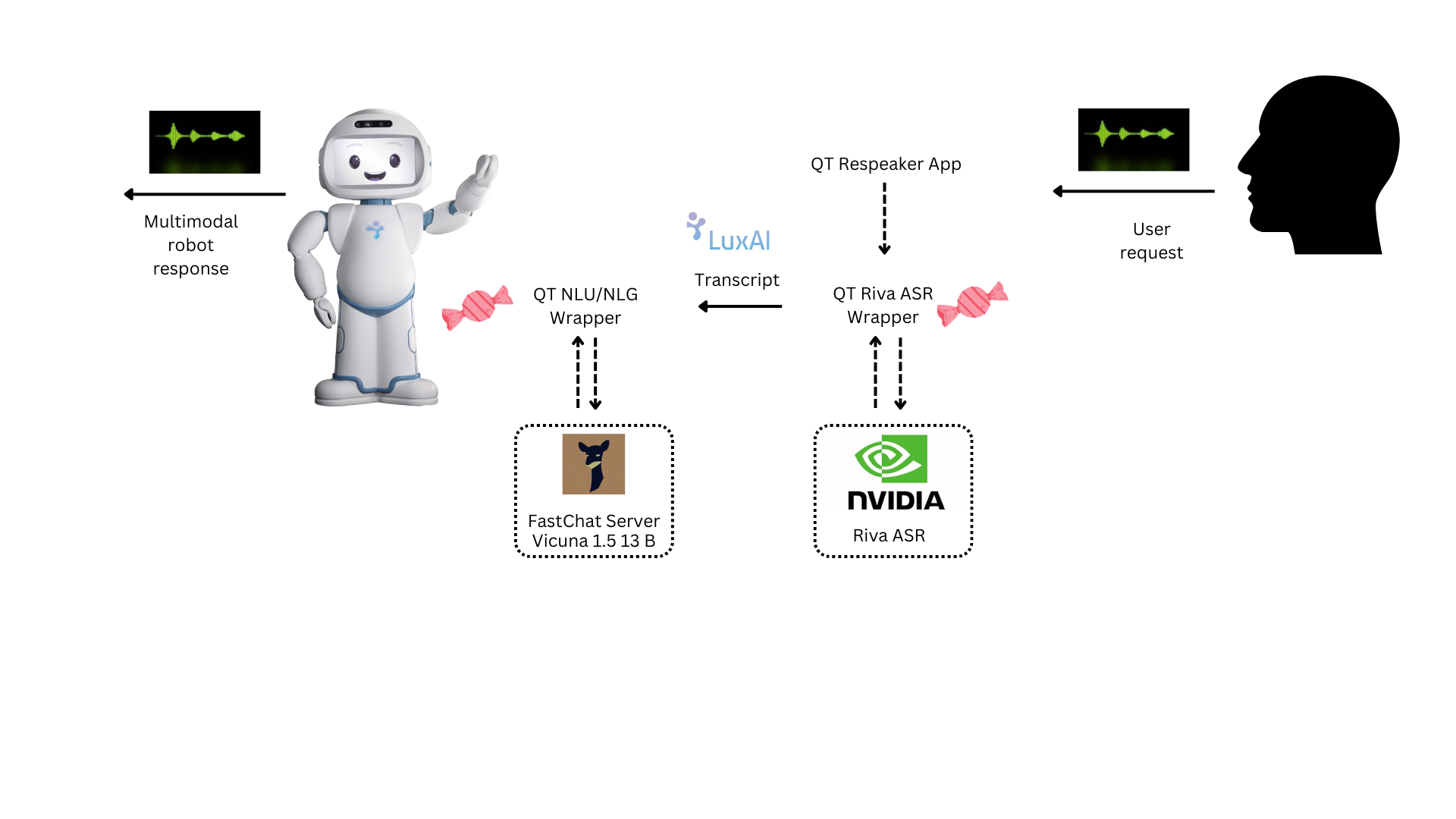

Running the conversational AI pipeline locally on the QTrobot AI@Edge

NVIDIA Riva ASR is a powerful multilingual speech recognition software development kit, accelerated by the GPU of the NVIDIA Jetson AGX Orin module that powers the newest QTrobot. Riva ASR runs smoothly on QTrobot, and we noticed only minimal GPU resource consumption. The Riva SDK runs as a containerized server, so other devices can access it as well. The SDK container is available after registering on the NVIDIA NGC hub, and downloading it requires setting up an API key.

Vicuna v1.5 13B was developed by LMSYS (UC Berkeley) by fine-tuning Meta's LLaMA 2 large language model for chat, using user-shared conversations collected from ShareGPT. The model may be used under the terms of the LLaMA 2 license agreement. It runs smoothly on the NVIDIA Jetson Orin GPU integrated in the new QTrobot and consumes 28 GB of GPU memory. In our example, we use a FastChat server running locally on QTrobot to make the most of the Vicuna model's language processing capabilities.
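As a rough sanity check on the 28 GB figure, here is a back-of-the-envelope estimate. It assumes the weights are held in 16-bit floating point (2 bytes per parameter) with no quantization; this is our assumption, not a measured profile of the QTrobot setup:

```latex
\underbrace{13 \times 10^{9}}_{\text{parameters}} \times \underbrace{2\ \text{bytes}}_{\text{FP16 weight}} = 26 \times 10^{9}\ \text{bytes} \approx 26\ \text{GB}
```

Under that assumption, the weights alone account for about 26 GB, and the remaining couple of gigabytes would plausibly go to activations, the attention key-value cache and the CUDA runtime.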

We developed a set of wrappers that connect these models with ROS and QTrobot; you can find them in the LuxAI tutorial GitHub repository (QTrobot Offline Conversation). Of course, you are also free to try other models locally and explore what they can offer.
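One way a wrapper can talk to a locally served model is FastChat's OpenAI-compatible API server (`fastchat.serve.openai_api_server`). The sketch below builds a chat-completion request with only the Python standard library. It is hypothetical: the URL, port 8000 and model name are assumptions, and this is not necessarily how the QTrobot Offline Conversation wrappers communicate with FastChat.

```python
import json
import urllib.request
from typing import Dict, List

# Assumptions (hypothetical for this sketch): FastChat's OpenAI-compatible
# API server runs locally on port 8000 and serves "vicuna-13b-v1.5".
API_URL = "http://localhost:8000/v1/chat/completions"
MODEL_NAME = "vicuna-13b-v1.5"


def build_chat_request(messages: List[Dict[str, str]],
                       temperature: float = 0.7,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) a chat-completion HTTP POST request."""
    payload = {
        "model": MODEL_NAME,
        "messages": messages,  # e.g. [{"role": "user", "content": "Hi"}]
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(messages: List[Dict[str, str]]) -> str:
    """Send the request to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(messages), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Keeping the request in the widely used chat-completions shape is what makes swapping in a different local model cheap: only `MODEL_NAME` (and possibly `API_URL`) would need to change.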

Now you can deploy state-of-the-art language models in a trustworthy, privacy-controlled and network-independent way!

Installing the NVIDIA Riva ASR software

We have developed a Riva ASR wrapper for QTrobot, known as the QT Riva ASR App. This wrapper receives raw streamed audio from the QT Respeaker App and utilizes the NVIDIA Riva SDK to transcribe the audio. The QT Riva ASR App is already pre-installed on QTrobot AI@Edge. However, to use the NVIDIA Riva SDK, you need to download and install it using your API key. Here are some quick steps to obtain an API key and install the SDK:
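To make "raw streamed audio" more concrete, here is a minimal sketch of cutting a continuous PCM buffer into fixed-duration chunks before handing them to a streaming recognizer. The audio format constants (16 kHz, 16-bit, mono) and the `chunk_audio` helper are hypothetical assumptions for illustration; they are not taken from the QT Respeaker App or the QT Riva ASR App.

```python
from typing import Iterator

# Assumed audio format (hypothetical for this sketch): 16 kHz, 16-bit, mono PCM.
SAMPLE_RATE_HZ = 16000
BYTES_PER_SAMPLE = 2  # 16-bit signed PCM
CHANNELS = 1


def chunk_audio(pcm: bytes, chunk_ms: int = 100) -> Iterator[bytes]:
    """Split raw PCM audio into fixed-duration chunks for streaming ASR.

    The last chunk is shorter if the audio length is not an exact
    multiple of the chunk size.
    """
    bytes_per_chunk = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * CHANNELS * chunk_ms // 1000
    for start in range(0, len(pcm), bytes_per_chunk):
        yield pcm[start:start + bytes_per_chunk]


if __name__ == "__main__":
    one_second = bytes(SAMPLE_RATE_HZ * BYTES_PER_SAMPLE)  # 1 s of silence
    chunks = list(chunk_audio(one_second, chunk_ms=100))
    print(len(chunks), len(chunks[0]))  # prints: 10 3200
```

Smaller chunks deliver partial transcripts sooner at the cost of more messages per second; 100 ms is only an illustrative middle ground here.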

- Sign in to the NVIDIA NGC portal (sign up if you do not have an account).

- Follow the instructions in Generating Your NGC API Key to obtain an API key.

- Use your API key to log in to the NGC registry by entering the following command and following the prompts (the NGC CLI is already installed on QTrobot):

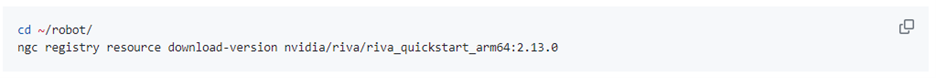

- Download the Embedded Riva start scripts into the ~/robot folder using the following command:

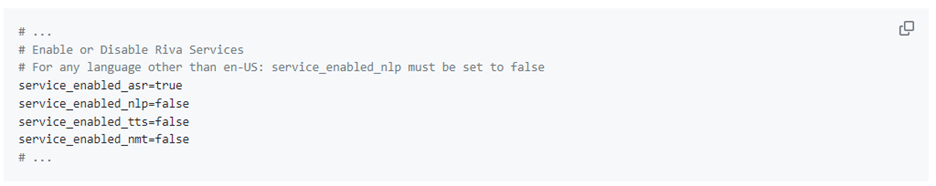

- Modify ~/robot/riva_quickstart_arm64_v2.13.0/config.sh to disable unnecessary services and enable ASR. The relevant part of config.sh should look like this:

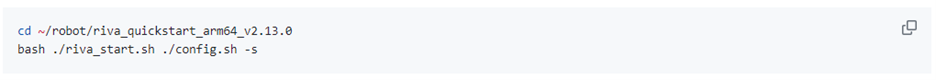

- Initialize the Riva ASR container. This may take some time, depending on the speed of your Internet connection.
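If you prefer to script the config.sh change instead of editing it by hand, a small helper can rewrite the service flags. This is a hypothetical sketch: the variable names in `DESIRED_SERVICES` are our assumption of what the Riva quick-start config.sh contains, so compare them with your own copy (and the screenshot above) before relying on it.

```python
import re
from typing import Dict

# Assumed variable names from the Riva quick-start config.sh (hypothetical;
# verify against your own copy of the file).
DESIRED_SERVICES = {
    "service_enabled_asr": "true",
    "service_enabled_nlp": "false",
    "service_enabled_tts": "false",
    "service_enabled_nmt": "false",
}


def set_service_flags(config_text: str, flags: Dict[str, str]) -> str:
    """Return config_text with each `name=value` assignment rewritten.

    Names that do not appear in the text are left untouched; comments and
    all other lines are preserved.
    """
    for name, value in flags.items():
        # Match the assignment at the start of a line, keeping its indentation.
        pattern = re.compile(rf"^([ \t]*{re.escape(name)}=).*$", re.MULTILINE)
        config_text = pattern.sub(rf"\g<1>{value}", config_text)
    return config_text


if __name__ == "__main__":
    sample = "service_enabled_asr=false\nservice_enabled_nlp=true\n"
    print(set_service_flags(sample, DESIRED_SERVICES))
```

To apply it, read ~/robot/riva_quickstart_arm64_v2.13.0/config.sh, pass its text through `set_service_flags`, and write the result back, ideally after keeping a backup of the original file.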

After installing the Riva SDK on your QTrobot, you are ready to launch the offline conversation demo as follows:

- First, start the Riva ASR container and wait until it has loaded. This may take 1 to 2 minutes:

- Launch the qt_riva_asr_app node for offline speech recognition:

- Finally, run the offline_conversation node. This also automatically starts the FastChat server with the vicuna-13b-v1.5 model. It may take up to a minute to load the model into GPU memory.
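Because both the Riva container and the language model need time to load, a launch script may want to wait until a service accepts connections before moving on to the next step. The helper below is a minimal sketch of such a readiness check using only the standard library. The `host` parameter also lets you poll the Riva server from another device on the network. Note that an open port only shows that the server is listening, not that every model has finished loading.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout_s: float = 180.0,
                  interval_s: float = 2.0) -> bool:
    """Poll until a TCP port accepts connections or the timeout expires.

    Returns True as soon as a connection succeeds, False on timeout.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval_s):
                return True
        except OSError:
            # Not listening yet (connection refused, unreachable or timed out).
            remaining = deadline - time.monotonic()
            if remaining <= 0:
                return False
            time.sleep(min(interval_s, remaining))
```

For example, `wait_for_port("localhost", 50051)` would wait up to three minutes for the Riva server, assuming it listens on 50051, the usual Riva gRPC port (check your config.sh).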

Giving the robot an identity

Different social roles and social situations require speakers to behave and speak differently. We can reproduce these differences in the robot's behavior by writing prompts that steer the language model toward language that fits the desired identity. For example, the robot can be a tourist guide or a teacher's assistant, an astronaut who has just returned from space, or an artificial companion that helps learners of a foreign language practice conversation. Writing good prompts is an art! You can find advice and tips on writing good prompts in A complete guide to build a Conversational Social Robot with QTrobot and ChatGPT. To use a custom character prompt, edit the `prompt` parameter in the `offline_conversation.yaml` config file and restart the offline conversation demo. QTrobot will then use your prompt when generating responses from the Vicuna model.
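To illustrate how a character prompt can shape the model's input, here is a minimal sketch that combines a character description, previous turns and the new user utterance into a single prompt string. The layout follows our understanding of the Vicuna v1.1/v1.5 chat format used by FastChat (verify against FastChat's conversation templates); `build_vicuna_prompt` is a hypothetical helper, not the code in the offline_conversation node.

```python
from typing import List, Tuple

# Separators of a Vicuna-style conversation template (our understanding of
# FastChat's Vicuna format; treat them as an assumption).
SEP = " "
SEP2 = "</s>"


def build_vicuna_prompt(character: str,
                        history: List[Tuple[str, str]],
                        user_text: str) -> str:
    """Combine a character prompt, past turns and the new user utterance.

    history holds (user_turn, robot_turn) pairs in chronological order; the
    result ends with "ASSISTANT:" so the model continues as the robot.
    """
    parts = [character.strip(), SEP]
    for user_turn, robot_turn in history:
        parts.append(f"USER: {user_turn}{SEP}ASSISTANT: {robot_turn}{SEP2}")
    parts.append(f"USER: {user_text}{SEP}ASSISTANT:")
    return "".join(parts)


if __name__ == "__main__":
    character = ("You are QTrobot, a friendly tourist guide. "
                 "Answer in at most two short sentences.")
    print(build_vicuna_prompt(character, [("Hi!", "Hello! Welcome.")],
                              "What should I visit first?"))
```

Putting the identity first means every turn is generated in that role, while short, explicit constraints (such as answer length) help keep spoken replies brief.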

Conclusion and further directions

Now that you have seen how to create an offline conversational social robot assistant using NVIDIA Riva ASR and the Vicuna/LLaMA 2 model, you can access the complete code in our GitHub repository. Feel free to use it as a foundation and add as many features as you like to customize your chatbot! For more ideas on extending the app, read our previous blog post, 'A Comprehensive Guide to Building a Conversational Social Robot with QTrobot and ChatGPT'.

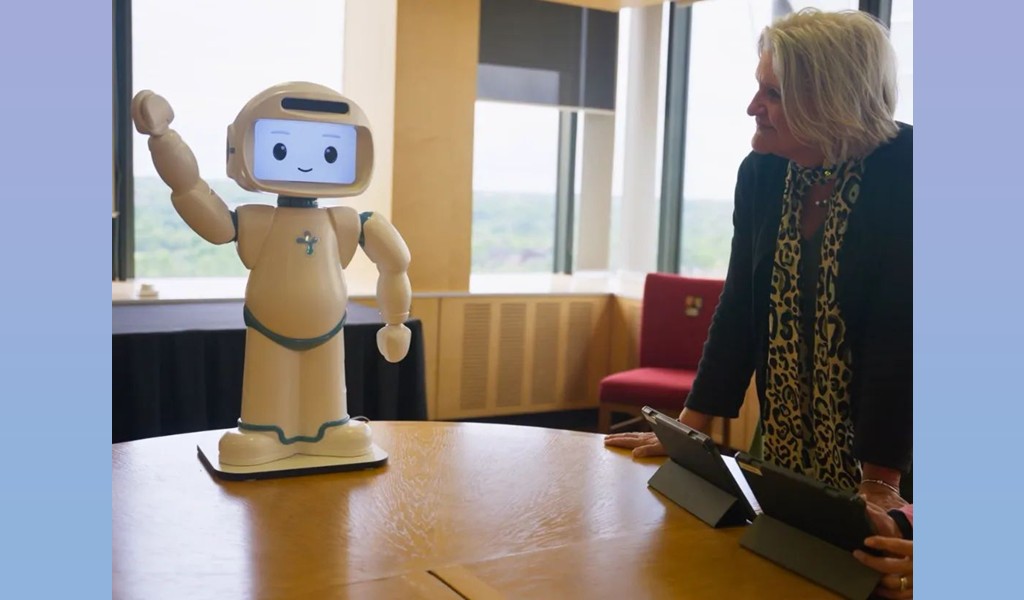

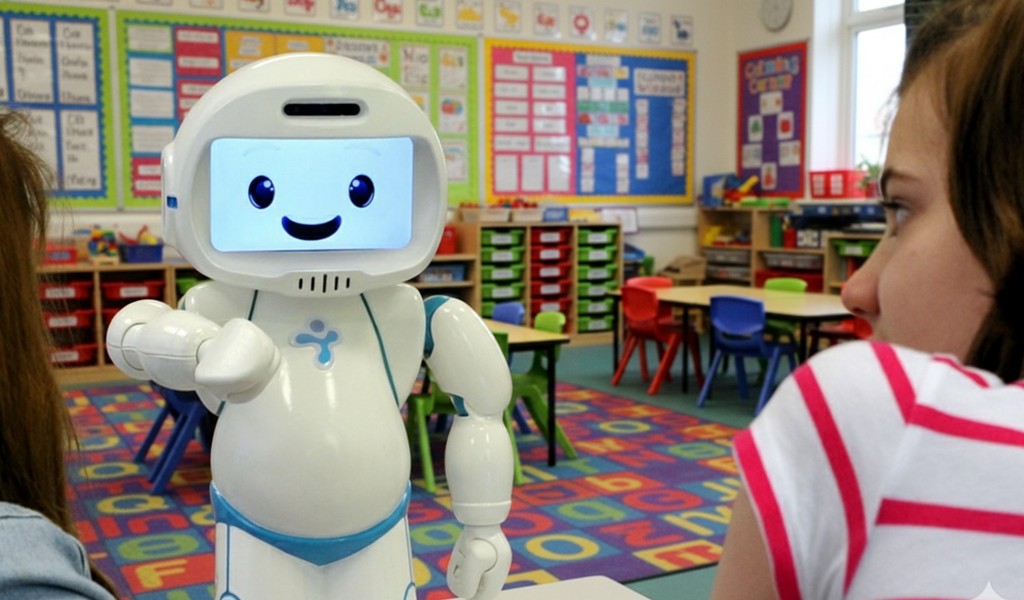

About LuxAI and QTrobot

LuxAI is the creator, developer, and manufacturer of QTrobot and distributes QTrobots to countries around the world. The QTrobot platform for research and development combines the best hardware components on the market with a friendly design. QTrobot is a robust platform suitable for intensive working hours and multidisciplinary research projects on social robotics and human-robot interaction. That makes QTrobot the ideal companion for researchers and developers in the field of social robotics.

QTrobot is a humanoid social robot with extensive capabilities for research and development. QTrobot is a helpful tool for delivering best practices in child education, especially for children with autism and special educational needs. As a robust platform with extensive built-in features, QTrobot can be used in many ways to support education and research projects.