Abstract:

Robots used in therapeutic scenarios, such as therapy for individuals with Autism Spectrum Disorder (ASD), often take part in imitation learning activities in which a person mimics the robot’s motions. To make it easier to add new types of motions to the robot’s repertoire, it is advantageous for the robot to learn motions by observing human demonstrations, for example from a therapist.

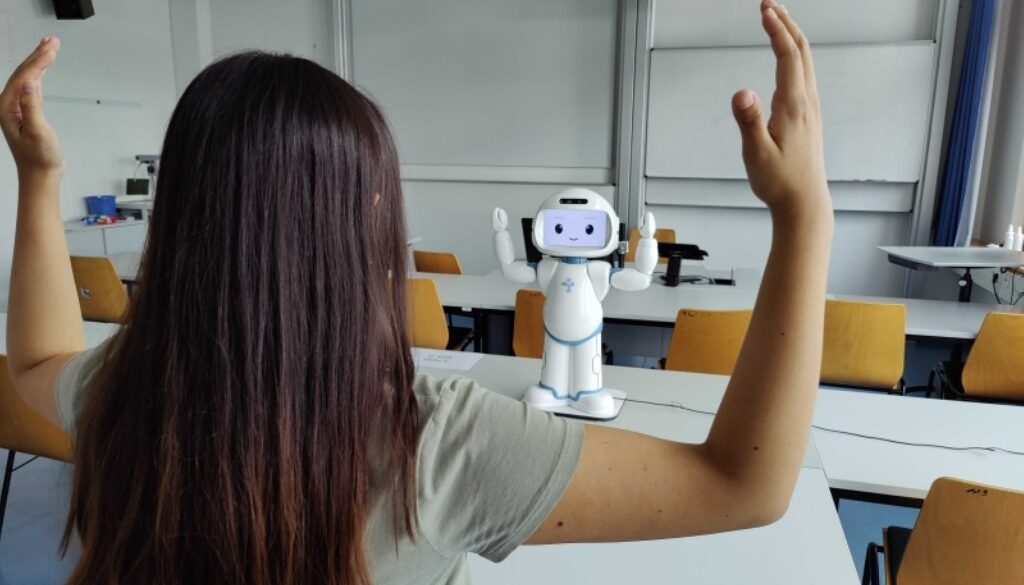

This paper investigates an approach for acquiring motions from human skeleton observations collected by a robot-centric RGB-D camera. Given a sequence of joint observations, the observed joint positions are mapped to the robot’s configuration and then executed by a PID position controller. The method’s effectiveness, in particular its reproduction error, was evaluated in a study in which a QTrobot acquired various upper-body dance moves from multiple participants. The results demonstrate the overall feasibility of the method, but also show that noise in the skeleton observations can degrade reproduction quality.
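To make the pipeline described above more concrete, the following is a minimal sketch of its two steps: turning skeleton points into a joint angle, and tracking that angle with a PID position controller. It is not the authors’ implementation. The function and class names (`joint_angle`, `clamp`, `PID`, `track`), the gains, the joint limits, and the toy velocity-driven joint model are all assumptions made for illustration.

```python
import math


def joint_angle(a, b, c):
    """Angle in radians at point b between segments b->a and b->c.

    a, b, c are 3D skeleton points, e.g. shoulder, elbow, wrist.
    """
    u = [a[i] - b[i] for i in range(3)]
    v = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    if norm == 0.0:
        raise ValueError("degenerate skeleton segment")
    # Clamp the cosine to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def clamp(value, low, high):
    """Restrict a target angle to (hypothetical) robot joint limits."""
    return max(low, min(high, value))


class PID:
    """Textbook discrete PID controller (illustrative gains only)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, target, measured, dt):
        error = target - measured
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # no previous sample on the first step
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def track(target, start=0.0, steps=1000, dt=0.01):
    """Drive a toy joint toward target; the PID output is used as a velocity."""
    pid = PID(kp=5.0, ki=0.1, kd=0.05)
    angle = start
    for _ in range(steps):
        velocity = pid.step(target, angle, dt)
        angle += velocity * dt  # simple integrator, not a real motor model
    return angle


# Usage: observed elbow angle -> clamped robot target -> tracked position.
shoulder, elbow, wrist = (0.0, 0.3, 0.0), (0.0, 0.0, 0.0), (0.25, 0.0, 0.0)
observed = joint_angle(shoulder, elbow, wrist)
target = clamp(observed, 0.0, 2.0)  # hypothetical joint limits in radians
reached = track(target)
reproduction_error = abs(target - reached)
```

In this sketch the reproduction error is simply the absolute difference between the target and the reached angle for a single joint. Noise in the skeleton points propagates directly into `observed`, which is one way to see why noisy observations can affect the reproduced motion.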

Reference:

Quiroga, Natalia, Alex Mitrevski, and Paul G. Plöger. “Learning Human Body Motions from Skeleton-Based Observations for Robot-Assisted Therapy.” arXiv preprint arXiv:2207.12224 (2022).